Snap OS now has in-app payments, a UI Kit, a permissions system for raw camera access, EyeConnect automatic colocation, and more.

What Are Snap OS, Snap Spectacles, And Snap Specs?

If you’re unfamiliar, the current Snap Spectacles are $99/month AR glasses for developers ($50/month for students), designed to let them build apps for Specs, the consumer product the company behind Snapchat plans to ship in 2026.

Spectacles have a 46° diagonal field of view, angular resolution comparable to Apple Vision Pro, relatively limited computing power, and a built-in battery life of just 45 minutes. They’re also the bulkiest AR device in a “glasses” form factor we’ve seen yet, weighing 226 grams. That’s almost 5 times as heavy as Ray-Ban Meta glasses, an admittedly unfair comparison.

But Snap CEO Evan Spiegel claims that the consumer Specs will have “a much smaller form factor, at a fraction of the weight, with a ton more capability”, while running all the same apps developed so far.

As such, what’s arguably more important to keep track of here is Snap OS, not the developer kit hardware.

Snap OS is unusual. While it’s Android-based under the hood, you can’t install APKs on it, and thus developers can’t run native code or use third-party engines like Unity. Instead, they build sandboxed “Lenses”, the company’s name for apps, using the Lens Studio software for Windows and macOS.

In Lens Studio, developers use JavaScript or TypeScript to interact with high-level APIs, while the operating system itself handles low-level tech like rendering and core interactions. This has many of the same advantages as the Shared Space of Apple’s visionOS: near-instant app launches, interaction consistency, and low-friction implementation of shared multi-user experiences. It even allows the Spectacles mobile app to be used as a spectator view for almost any Lens.

Snap OS doesn’t support multitasking, but this is more likely a limitation of the current hardware than the operating system itself.

Since releasing Snap OS in the latest Spectacles kit last year, Snap has repeatedly added new capabilities for developers building Lenses.

In March the company added the ability to use the GPS and compass heading to build experiences for outdoor navigation, detect when the user is holding a phone, and spawn a system-level floating keyboard for text entry.

In June, it added a suite of AI features, including AI speech to text for 40+ languages, the ability to generate 3D models on the fly, and advanced integrations with the visual multimodal capabilities of Google’s Gemini and OpenAI’s ChatGPT.

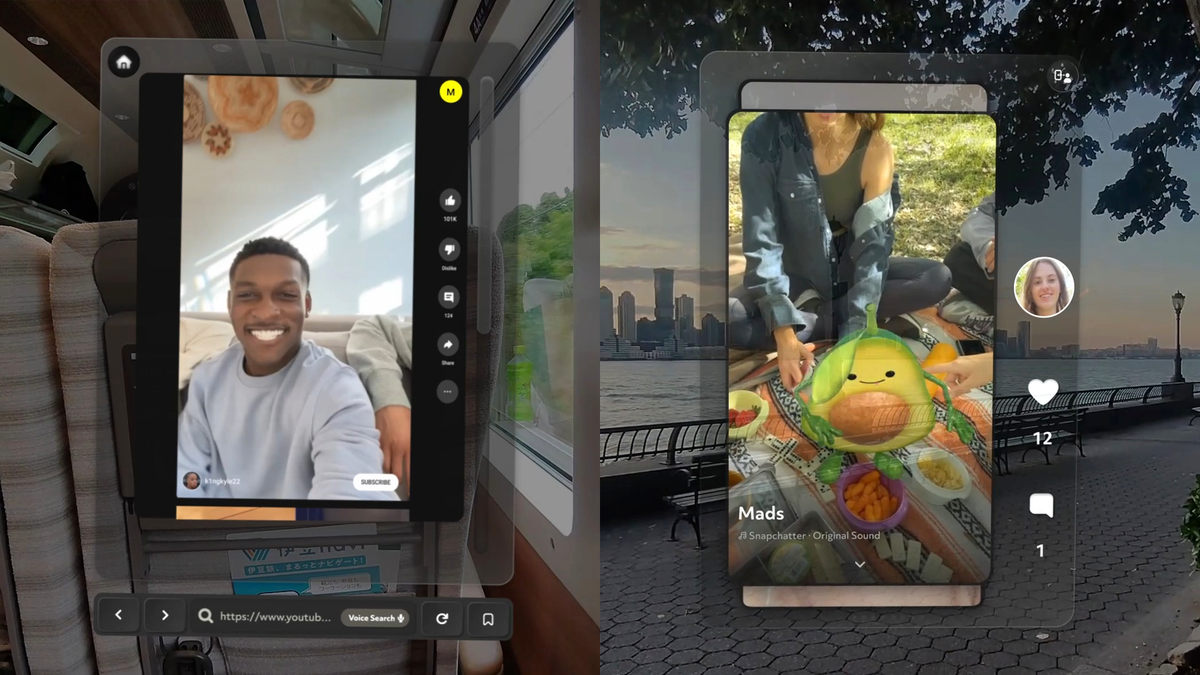

And last month, we went hands-on with Snap OS 2.0, which, rather than focusing only on the developer experience, also rounds out Snap’s first-party software offering as a step towards next year’s consumer Specs launch. The new OS version adds and improves first-party apps like Browser, Gallery, and Spotlight, and adds a Travel Mode, to bring the AR platform closer to being ready for consumers.

At Lens Fest this week, Snap detailed the new developer capabilities of Snap OS 2.0, including the ability for developers to monetize their Lenses.

Commerce Kit

Snap has repeatedly confirmed that all Lenses built for the current Spectacles developer kit will run on its consumer Specs next year. But until now it hasn’t really told developers why they’d want to build Lenses in the first place.

Commerce Kit is an API and payment system for Snap OS devices. Users set up a payment method and 4-digit PIN in the Spectacles smartphone app, and developers can implement microtransactions in their Lenses. It’s very similar to what you’d find on standalone VR headsets and traditional consoles.

Given that Lenses themselves can only be free, this is the first way for Lens developers to directly monetize their work.

Commerce Kit is currently in closed beta, and US-based developers can apply to join.

Permission Alerts

Snap OS Lenses cannot simultaneously access the internet and raw sensor data (camera frames, microphone audio, or GPS coordinates). This restriction has been a fundamental part of Snap’s software design.

There are higher-level Snap APIs for computer vision, speech to text, and colocation, to be clear, but if developers want to run fully custom code on raw sensor data they lose access to the internet.

The prompt (left) and what bystanders see (right).

Now, Snap has added the ability for experimental Lenses to get both raw sensor access and internet access. The catch is that a permissions prompt will appear every time the Lens is launched, and the forward-facing LED on the glasses will pulse while the app is in use.

Snap says this Bystander Indicator is crucial to let people nearby know that they may be recorded.
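The rule described in this section can be summarized as a small decision function. This is only an illustrative model, not a Snap OS or Lens Studio API: the `LensCapabilities` and `LaunchPolicy` types and the `resolveLaunchPolicy` function are hypothetical names, and the behavior for Lenses that use only one of the two capabilities is an assumption, since only the combined case is described here.

```typescript
// Hypothetical model of the permission rule; none of these names are Snap APIs.
interface LensCapabilities {
  usesInternet: boolean;
  usesRawSensors: boolean; // camera frames, microphone audio, or GPS coordinates
  experimental: boolean;   // whether the Lens is marked experimental
}

interface LaunchPolicy {
  allowed: boolean;
  showPermissionPrompt: boolean; // prompt shown on every launch
  pulseBystanderLed: boolean;    // forward-facing LED pulses while in use
}

function resolveLaunchPolicy(lens: LensCapabilities): LaunchPolicy {
  const needsBoth = lens.usesInternet && lens.usesRawSensors;

  if (!needsBoth) {
    // Assumption: a Lens using only one capability launches without the new prompt or LED.
    return { allowed: true, showPermissionPrompt: false, pulseBystanderLed: false };
  }
  if (!lens.experimental) {
    // Non-experimental Lenses cannot combine internet access with raw sensor data.
    return { allowed: false, showPermissionPrompt: false, pulseBystanderLed: false };
  }
  // Experimental Lenses may combine both, at the cost of a prompt and the Bystander Indicator.
  return { allowed: true, showPermissionPrompt: true, pulseBystanderLed: true };
}
```

In this model the prompt and the LED always go together, which mirrors the trade-off Snap describes: the combined access is allowed only when both the wearer and bystanders are informed.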

UI Kit

The Spectacles UI Kit (SUIK) lets developers easily add the same UI components that the Snap OS system itself uses.

Mobile Kit

The new Spectacles Mobile Kit lets iOS and Android smartphone apps communicate with Spectacles Lenses via Bluetooth.

Snap OS already lets developers easily use your iPhone as a 6DoF tracked controller via the Spectacles phone app, but the new Mobile Kit allows developers to build custom companion phone apps, or add this as a feature to their existing smartphone app.

“Send data from mobile applications such as health tracking, navigation, and gaming apps, and create extended augmented reality experiences that are hands free and don’t require Wi-Fi.”

Semantic Hit Testing

Semantic Hit Testing is a new API that lets Snap OS Lenses cast a ray, for example from the user’s hand, and figure out whether it hits a valid ground surface.

This can be used for instant placement of virtual objects, and is a developer feature we’ve seen arrive on multiple XR platforms now.
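To give a sense of the geometry involved, here is a minimal sketch of a ray test against the ground. It is not Snap’s API: the `Vec3` and `GroundHit` types and the `hitTestGround` function are hypothetical, and the sketch assumes the ground is a flat horizontal plane at a known height with the y axis pointing up, whereas the real feature decides semantically whether the surface hit is valid ground.

```typescript
// Hypothetical types and function; none of these names come from Lens Studio.
type Vec3 = { x: number; y: number; z: number };

interface GroundHit {
  point: Vec3;      // where the ray meets the assumed ground plane
  distance: number; // distance along the ray, in the same units as the inputs
}

// Intersects a ray with a horizontal plane at height groundY (y is up).
// Returns null if the ray is parallel to the plane, points away from it,
// or the hit lies farther than maxDistance along the ray.
function hitTestGround(
  origin: Vec3,
  direction: Vec3,
  groundY: number,
  maxDistance: number
): GroundHit | null {
  const length = Math.hypot(direction.x, direction.y, direction.z);
  if (length === 0) return null;

  // Normalize so that t below is a true distance along the ray.
  const d = { x: direction.x / length, y: direction.y / length, z: direction.z / length };
  if (Math.abs(d.y) < 1e-6) return null; // ray runs parallel to the ground

  const t = (groundY - origin.y) / d.y;
  if (t < 0 || t > maxDistance) return null; // behind the origin or out of range

  return {
    point: { x: origin.x + d.x * t, y: groundY, z: origin.z + d.z * t },
    distance: t,
  };
}
```

A Lens could use a result like this to place an object the moment the user points at the floor, for example by casting from the hand position along the pointing direction and spawning the object at `point` when the result is not null.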

Snap Cloud

Snap Cloud is a back-end-as-a-service platform for Snap OS developers, delivered via a partnership with Supabase.

The service provides authentication via Snap identity, databases, serverless functions, real-time data communication and synchronization, and CDN object storage.

Snap Cloud is currently in alpha, and developers must apply for access, with approval granted on a case-by-case basis.

EyeConnect

Snap OS already has the best system-level colocation support we’ve seen yet, letting you easily join a local multiplayer session with very little friction. When someone nearby is in a Lens that supports multiplayer, you’ll see their session listed in the main OS menu to join, and after a short calibration step you’re colocated.

With the new EyeConnect feature, Snap seems to be pushing even harder on the idea of low-friction colocated multiplayer.

According to Snap, EyeConnect directs Spectacles wearers to simply look at each other, and uses device and face tracking to automatically co-locate all users in a session without any mapping step.

Lenses that place virtual elements at real-world coordinates use a separate Custom Locations system, which doesn’t support EyeConnect.